Long gone are the days when networking labs were all physical. Nowadays, even virtual cables are connected to further layers of virtual cables. Virtual labs work best in combination with hands-on hardware, which helps put things in context, especially for visual learners; how much hardware you need also depends on the area of networking being studied.

Virtualisation is not new, and it comes in many forms: the first hypervisors ran on IBM mainframes in the swinging 60s, virtual LANs arrived in the 90s, then came stateless servers, and now we decouple functions from hardware with SR-IOV, virtual network functions (VNFs), overlays, controllers and beyond, all within an architectural reference framework.

Since the beginning of the last decade, virtual lab platforms such as GNS3, EVE-NG and VIRL have become the usual weapons of choice for labbing up network architectures.

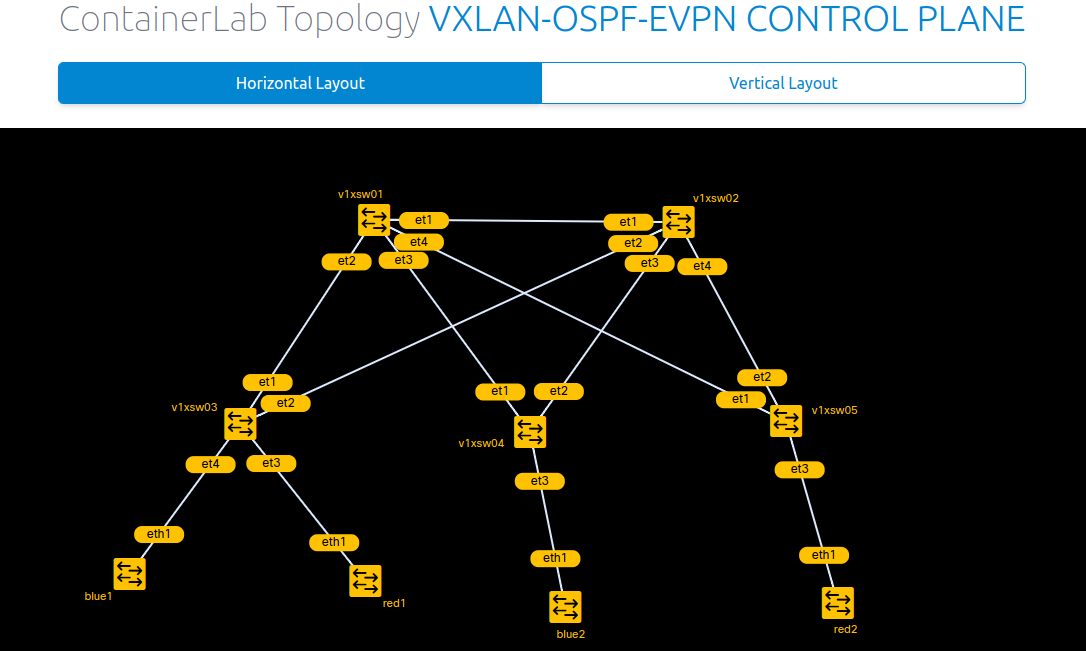

Lately, I have been using these in conjunction with netlab (formerly known as netsim-tools). It is brilliant: it uses Vagrant (from HashiCorp, the makers of Terraform) and the libvirt API, which is a great way to drive the Kernel-based Virtual Machine (KVM) module, where hardware meets userspace through QEMU. Combine that with containerlab (Docker containers) for workstations and graphing tools, a few lines of YAML describing the topology, and configuration pushed by Ansible playbooks and Jinja2 templates, and we can spin up a 10-device SDN/VNF pod, such as MPLS Segment Routing (SR) and/or VXLAN with an EVPN control plane, ready for testing in a matter of minutes.
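To give a feel for how little is needed to describe a pod, here is a minimal Python sketch that writes out a netlab-style topology for a 2-spine/3-leaf fabric. Treat it as an assumption-laden skeleton rather than a working lab: the attribute names (`provider`, `defaults.device`, `module`, `bgp.as`, `nodes`, `links`) reflect my understanding of netlab's topology format and should be checked against the netlab documentation, the device type `eos` and AS number are arbitrary, and a real EVPN lab would also need VLAN/VRF definitions. The helper names are hypothetical.

```python
"""Emit a netlab-style topology for a 2-spine / 3-leaf VXLAN/EVPN pod.

Assumption: the attribute names below follow netlab's topology format as I
understand it; verify them against the netlab docs. Device type and AS
number are arbitrary examples, and VLAN/VRF definitions are omitted.
"""
from itertools import product

SPINES = ["s1", "s2"]
LEAVES = ["l1", "l2", "l3"]


def fabric_links(spines: list[str], leaves: list[str]) -> list[str]:
    # Clos wiring: every leaf connects to every spine; no leaf-leaf or spine-spine links.
    return [f"{spine}-{leaf}" for spine, leaf in product(spines, leaves)]


def topology_yaml(spines: list[str], leaves: list[str],
                  device: str = "eos", asn: int = 65000) -> str:
    # YAML is written by hand so the sketch needs only the standard library.
    lines = [
        "provider: libvirt",
        f"defaults.device: {device}",
        "module: [ vlan, vxlan, ospf, bgp, evpn ]",
        f"bgp.as: {asn}",
        f"nodes: [ {', '.join(spines + leaves)} ]",
        "links:",
    ]
    lines += [f"- {link}" for link in fabric_links(spines, leaves)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Save the output as topology.yml and start the lab with `netlab up`.
    print(topology_yaml(SPINES, LEAVES), end="")
```

Generating the link list rather than typing it keeps the full spine-to-leaf mesh consistent when the pod grows towards ten devices.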

Netlab uses UDP tunnels between virtual bridges, and these can be trunked externally to the cloud or to other sites.

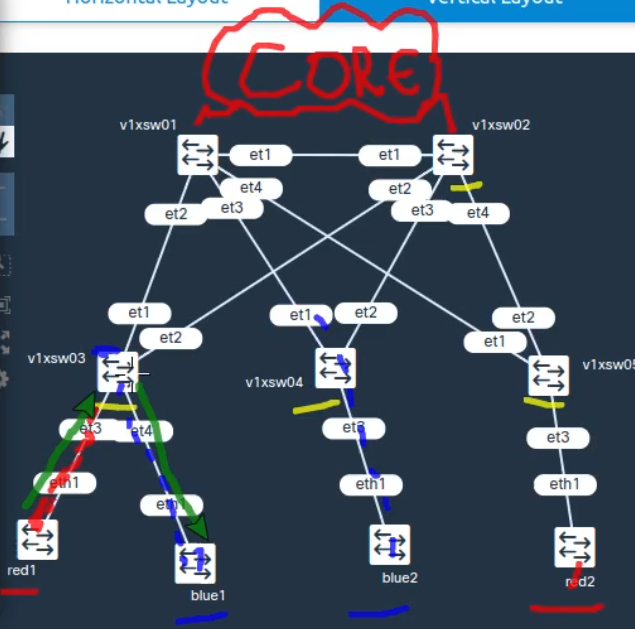

The Clos topology (originally devised for telephone switching networks) is typically the best design for data centre fabrics, thanks to ECMP and its scalability. As an analogy, once an overlay is in place you could think of this example's two spines as the supervisor modules (SUPs) and the three leaves as fabric line cards, as if the whole pod were one big virtual clustered switch.
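ECMP is what makes the Clos design attractive: each leaf has one equal-cost path through every spine, and a per-flow hash decides which path a given flow takes. The Python sketch below is a simplified illustration under stated assumptions: SHA-256 stands in for the vendor-specific hash functions real switch ASICs use, and the `Flow` type and `pick_uplink` helper are hypothetical.

```python
import hashlib
from typing import NamedTuple


class Flow(NamedTuple):
    """Hypothetical 5-tuple identifying one flow."""
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


def pick_uplink(flow: Flow, uplinks: list[str]) -> str:
    # Hash the whole 5-tuple so every packet of a flow takes the same path
    # (no reordering) while different flows spread across the spines.
    # SHA-256 is only a stand-in for the switch's real hash function.
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return uplinks[int.from_bytes(digest[:4], "big") % len(uplinks)]


if __name__ == "__main__":
    uplinks = ["s1", "s2"]  # one equal-cost path per spine, as seen from a leaf
    counts = {u: 0 for u in uplinks}
    for port in range(49152, 49252):
        flow = Flow("10.1.10.10", "10.1.20.20", 6, port, 443)
        counts[pick_uplink(flow, uplinks)] += 1
    print(counts)
```

Hashing per flow rather than per packet keeps each flow's packets in order, and adding a spine simply adds another equal-cost entry to `uplinks`, which is where the horizontal scalability comes from.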

The lab is set up as follows:

1) Underlay control plane: OSPF provides L3 reachability between the tunnel endpoints, with iBGP for signalling

2) Overlay data plane: VXLAN

3) Overlay control plane: EVPN
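On the data plane, VXLAN (RFC 7348) wraps the original Ethernet frame in an 8-byte VXLAN header carried over UDP, destination port 4789. The 24-bit VXLAN Network Identifier (VNI) allows roughly 16 million segments, against 4094 usable VLAN IDs. This Python sketch builds and parses just that header; the outer Ethernet/IP/UDP headers are assumed to be added by the OS or switch ASIC, and the helper names are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Return the VNI from a VXLAN header, checking the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VXLAN I flag not set")
    return vni_word >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """UDP payload a VTEP would send; outer headers are added by the OS or ASIC."""
    return vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    payload = encapsulate(b"\x00" * 64, vni=10010)  # dummy 64-byte inner frame
    print(len(payload), parse_vni(payload))  # 72 10010
```

With an IPv4 underlay the outer headers plus VXLAN add 50 bytes (14 Ethernet + 20 IPv4 + 8 UDP + 8 VXLAN), which is why underlay MTUs are usually raised.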

Integrated Routing and Bridging (IRB) can be deployed in three ways:

Centralised IRB: suits designs where most traffic is inter-rack or north/south.

aIRB (distributed asymmetric IRB): MAC-VRF with L2VNIs.

sIRB (distributed symmetric IRB): /32 host routes in an IP-VRF with an L3VNI.
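The practical difference between the two distributed models is which VNI carries routed traffic between leaves. In aIRB the ingress leaf routes into the destination subnet and bridges on that subnet's L2VNI, so every leaf needs every L2VNI; in sIRB the ingress leaf sends on the IP-VRF's L3VNI, the egress leaf routes again, and EVPN type-2 (MAC/IP) routes are installed as /32 host routes. The following Python sketch models that simplified view; `MacIpRoute`, `routed_vni`, `build_ip_vrf` and `lookup` are hypothetical helpers, not any vendor's implementation, and "local" stands for a subnet served by the leaf's own anycast gateway.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class MacIpRoute:
    """Hypothetical, simplified EVPN type-2 (MAC/IP advertisement) route."""
    mac: str
    ip: str
    vtep: str   # next hop: loopback of the leaf that learned the host
    l3vni: int  # VNI of the IP-VRF, used for routed traffic in sIRB


def routed_vni(mode: str, dst_l2vni: int, l3vni: int) -> int:
    """VNI used on the wire for inter-subnet traffic between leaves."""
    if mode == "asymmetric":
        # aIRB: ingress leaf routes into the destination subnet, then bridges on its L2VNI.
        return dst_l2vni
    if mode == "symmetric":
        # sIRB: ingress leaf routes into the IP-VRF's L3VNI; the egress leaf routes again.
        return l3vni
    raise ValueError(f"unknown IRB mode: {mode}")


def build_ip_vrf(local_subnets, type2_routes):
    """Build (prefix, next_hop) entries for one IP-VRF on a leaf."""
    table = [(ipaddress.ip_network(s), "local") for s in local_subnets]
    for route in type2_routes:
        # sIRB: each remote MAC/IP becomes a /32 host route towards its VTEP.
        table.append((ipaddress.ip_network(f"{route.ip}/32"), (route.vtep, route.l3vni)))
    return table


def lookup(table, dst):
    """Longest-prefix match; a /32 host route beats the local subnet route."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, nh) for net, nh in table if addr in net]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


if __name__ == "__main__":
    remote = MacIpRoute("aa:bb:cc:00:00:30", "10.1.10.30", "10.0.0.13", 50001)
    vrf = build_ip_vrf(["10.1.10.0/24", "10.1.20.0/24"], [remote])
    print(lookup(vrf, "10.1.10.30"))  # ('10.0.0.13', 50001)
    print(lookup(vrf, "10.1.20.5"))   # local
    print(routed_vni("asymmetric", dst_l2vni=10010, l3vni=50001))  # 10010
```

The /32 entry is why a host in a stretched subnet is still reached on the correct leaf: longest-prefix match prefers the host route over the anycast-gateway subnet.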

____________________________________________________________________